Supporting Services

September 28, 2021

Introduction

This is Part 2 of our three-part series providing step-by-step instructions for installing and deploying IBM Maximo Manage for MAS.

To make the process more approachable and make sure each step is demystified, we have broken down the installation into three sections.

- Installing the OpenShift infrastructure (OKD)

- Deploying the supporting services: Behavior Analytics Service (BAS), Suite Licensing Services (SLS) and MongoDB

- Deploy and configure MAS and Maximo Manage

For our examples, we are using DigitalOcean (https://digitalocean.com) as our cloud provider. They are a cost-effective alternative to AWS, Azure, or GCP and have been very supportive of our efforts deploying Maximo in their environment.

We are also using OKD (https://www.okd.io), which is the community edition of OpenShift. While OKD provides a fully functioning OpenShift instance, it does not come with the same support levels as the commercial OpenShift product from Red Hat (an IBM company) and may not be appropriate for production deployments.

In step 5 of Part 1, we cloned the Sharptree scripts repository from GitHub. The scripts have been updated to support Part 2. If you had previously cloned the repository, make sure to pull the latest version by navigating to your local copy and running the command below.

git pull https://github.com/sharptree/do-okd-deploy-mas8.git

Updated May 9th, 2022: Updated for MAS 8.6 and forcing SLS version 3.2.0.

Part 2 - Deploying the supporting services: Behavior Analytics Service (BAS), Suite Licensing Services (SLS) and MongoDB

In Part 1 of our series we installed OpenShift Community Edition (OKD) on DigitalOcean. In this part we will install the prerequisites and dependencies for deploying Maximo Manage, including the Maximo Suite operator, Behavior Analytics Service, Suite Licensing Service, and MongoDB.

Configure Operator Catalogs and Access Secrets

IBM Catalog Source

To access software within the IBM operator catalog you will need your IBM entitlement key, which can be found at https://myibm.ibm.com/products-services/containerlibrary.

Manual Configuration

Create a new docker-registry secret named ibm-entitlement-key in the default namespace. Replace the docker-password value with your entitlement key.

oc create secret docker-registry ibm-entitlement-key \
  --docker-username=cp \
  --docker-password=YOUR_ENTITLEMENT_KEY \
  --docker-server=cp.icr.io \
  --namespace=default

Run the following command to create the IBM operator catalog source:

oc apply -f - >/dev/null <<EOF
apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: ibm-operator-catalog
  namespace: openshift-marketplace
spec:
  displayName: "IBM Operator Catalog"
  publisher: IBM
  sourceType: grpc
  image: docker.io/ibmcom/ibm-operator-catalog
  updateStrategy:
    registryPoll:
      interval: 45m
EOF

Scripted Configuration

Ensure you have a valid oc session by calling oc login. After obtaining the IBM entitlement key run the following command.

./scripts/create-ibm-catalog.sh ENTITLEMENT_KEY_HERE

Redhat Certified Catalog Source

To install the required MongoDB and Behavior Analytics Service operators the Redhat certified catalog must be installed. You will need a RedHat account, which you can sign up for here: https://www.redhat.com/wapps/ugc/register.html

Manual Configuration

To access the RedHat certified catalog source you will need to establish valid pull secrets. To do this, visit https://access.redhat.com/terms-based-registry/, and create a new service account by clicking the New Service Account button.

Enter a name, such as OKD and an optional description then click the Create button.

Note that it may take up to an hour for RedHat to process a new token and you may see authentication errors until it fully processes. Each catalog source will create a corresponding Pod under the openshift-marketplace namespace. You can view the events for the Pod to troubleshoot errors.

After the service account has been created click the Docker Configuration tab and click the "view its contents" link under the Download credentials configuration tab. The value will look something like the following.

{
  "auths": {
    "registry.redhat.io": {
      "auth": "MTQwMjMyNDB8b2NwOmV5Smh..."
    }
  }
}

Copy the registry.redhat.io key and value, duplicate it, and change the key of the copy to registry.connect.redhat.com, as in the JSON below.

{
  "auths": {
    "registry.redhat.io": {
      "auth": "MTQwMjMyNDB8b2NwOmV5Smh..."
    },
    "registry.connect.redhat.com": {
      "auth": "MTQwMjMyNDB8b2NwOmV5Smh..."
    }
  }
}

Run the following command to create the RedHat pull secret:

oc apply -f - >/dev/null <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: redhat-entitlement-key
  namespace: openshift-marketplace
stringData:
  .dockerconfigjson: |
    {
      "auths": {
        "registry.redhat.io": {
          "auth": "MTQwMjMyNDB8b2NwOmV5Smh..."
        },
        "registry.connect.redhat.com": {
          "auth": "MTQwMjMyNDB8b2NwOmV5Smh..."
        }
      }
    }
type: kubernetes.io/dockerconfigjson
EOF

Run the following command to create the RedHat certified operator catalog source. Note that the secrets key value matches the name of the secret created in the previous step.

oc apply -f - >/dev/null <<EOF
apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: certified-operators
  namespace: openshift-marketplace
spec:
  displayName: Certified Operators
  image: 'registry.redhat.io/redhat/certified-operator-index:v4.8'
  secrets:
    - redhat-entitlement-key
  priority: -400
  publisher: Red Hat
  sourceType: grpc
  updateStrategy:
    registryPoll:
      interval: 10m0s
EOF

Run the following command to create the RedHat operator catalog source. Note that the secrets key value matches the name of the pull secret created earlier.

oc apply -f - >/dev/null <<EOF
apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: redhat-operators
  namespace: openshift-marketplace
spec:
  displayName: RedHat Operators
  image: 'registry.redhat.io/redhat/redhat-operator-index:v4.8'
  secrets:
    - redhat-entitlement-key
  priority: -400
  publisher: Red Hat
  sourceType: grpc
  updateStrategy:
    registryPoll:
      interval: 10m0s
EOF

Run the following command to create the RedHat marketplace operator catalog source. Note that the secrets key value matches the name of the pull secret created earlier.

oc apply -f - >/dev/null <<EOF
apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: redhat-marketplace-operators
  namespace: openshift-marketplace
spec:
  displayName: RedHat Marketplace Operators
  image: 'registry.redhat.io/redhat/redhat-marketplace-index:v4.8'
  secrets:
    - redhat-entitlement-key
  priority: -400
  publisher: Red Hat
  sourceType: grpc
  updateStrategy:
    registryPoll:
      interval: 10m0s
EOF

Run the following command to create the RedHat community operator catalog source. Note that the secrets key value matches the name of the pull secret created earlier.

oc apply -f - >/dev/null <<EOF
apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: redhat-community-operators
  namespace: openshift-marketplace
spec:
  displayName: RedHat Community Operators
  image: 'registry.redhat.io/redhat/community-operator-index:v4.8'
  secrets:
    - redhat-entitlement-key
  priority: -400
  publisher: Red Hat
  sourceType: grpc
  updateStrategy:
    registryPoll:
      interval: 10m0s
EOF

To update the cluster pull secrets to allow access to all of these registries, follow the Update Global Pull Secret section below.

Scripted Configuration

To access the RedHat certified catalog source you will need to establish valid pull secrets. To do this, visit https://access.redhat.com/terms-based-registry/, and create a new service account by clicking the New Service Account button.

Enter a name, such as OKD and an optional description then click the Create button.

After the service account has been created click the Docker Configuration tab and click the "view its contents" link under the Download credentials configuration tab. The value will look something like the following.

{
  "auths": {
    "registry.redhat.io": {
      "auth": "MTQwMjMyNDB8b2NwOmV5Smh..."
    }
  }
}

Copy the auth value (MTQwMjMyNDB8b2NwOmV5Smh... in this example); this is the token that the script requests.

After obtaining the RedHat access token run the following command.

./scripts/create-redhat-catalog.sh REDHAT_ACCESS_TOKEN_HERE
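For the manual path, the duplicated registry entry can be generated instead of hand-edited. The following is only a sketch: the function name redhat_pull_secret_json and the output file name are our own illustrations and are not part of the Sharptree scripts. It assumes the token is the base64 auth value copied from the Docker Configuration tab, so no JSON escaping is needed.

```shell
# Build a .dockerconfigjson body that reuses one Red Hat token for both
# registry.redhat.io and registry.connect.redhat.com.
# Usage: redhat_pull_secret_json TOKEN   (hypothetical helper name)
redhat_pull_secret_json() {
  token=$1
  printf '{"auths": {"registry.redhat.io": {"auth": "%s"}, "registry.connect.redhat.com": {"auth": "%s"}}}\n' \
    "$token" "$token"
}

# Possible usage against a live cluster (not executed here):
#   redhat_pull_secret_json "$REDHAT_TOKEN" > redhat-pull-secret.json
#   oc create secret generic redhat-entitlement-key -n openshift-marketplace \
#     --type=kubernetes.io/dockerconfigjson \
#     --from-file=.dockerconfigjson=redhat-pull-secret.json
```

Generating the JSON this way avoids the most common manual mistake, which is a missing comma or brace after duplicating the entry.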

Update Global Pull Secret

The OKD cluster installs with fake credentials for the global pull secret. These need to be replaced with valid credentials so images can be pulled from RedHat and IBM.

Manual Configuration

Get your IBM credentials that were created earlier with the following command.

oc get secret ibm-entitlement-key -o yaml | yq -r .data | cut -d : -f 2 | sed -e 's/"//g' -e 's/{//g' -e 's/}//g' -e 's/ //g' | base64 -d

The output should look something like the following.

{"auths":{"cp.icr.io":{"username":"cp","password":"eyJhbGciOiJIU...","auth":"Y3A6ZXlKaGJHY2lP..."}}}

Take the RedHat configuration you created earlier and add the cp.icr.io key with its auth key and value, similar to below, then create a file called openshift-config-pull-secret.json with the value.

{
  "auths": {
    "registry.redhat.io": {
      "auth": "MTQwMjMyND..."
    },
    "registry.connect.redhat.com": {
      "auth": "MTQwMjMyND..."
    },
    "cp.icr.io": {
      "auth": "Y3A6ZXlKaG..."
    }
  }
}

Update the global secrets with the following command.

oc set data secret/pull-secret -n openshift-config --from-file=.dockerconfigjson=./openshift-config-pull-secret.json

This will restart the administrative pods and may take several minutes to complete.
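The auth value in a Docker configuration entry is the base64 encoding of username:password, which is why the IBM value shown above begins with Y3A6 (the encoding of "cp:"). If you would rather compute the cp.icr.io auth value directly from your entitlement key than decode the existing secret, a minimal sketch follows. The function name registry_auth is hypothetical.

```shell
# Compute the "auth" field for a registry entry: base64("username:password").
# Usage: registry_auth USERNAME PASSWORD   (hypothetical helper name)
registry_auth() {
  # tr strips the line wrapping that some base64 implementations add.
  printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}

# Possible usage (not executed here):
#   registry_auth cp "$IBM_ENTITLEMENT_KEY"
```

The result can be pasted as the auth value of the cp.icr.io entry in openshift-config-pull-secret.json.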

Scripted Configuration

Run the following command.

./scripts/update-global-pull-secret.sh

Configure Storage Classes

The digitalocean-okd-install.sh script should automatically create a csi-s3-s3fs storage class. However, during testing this sometimes failed so it is good to check. Run the following command and verify that the storage class was created. If not, follow the steps after to create it.

oc get storageclass
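If you want to script this check rather than read the table by eye, one option is to filter the name-only output. This is a sketch only: has_storageclass is a hypothetical helper, and it assumes oc get storageclass -o name prints one storageclass.storage.k8s.io/NAME entry per line.

```shell
# Reads `oc get storageclass -o name` output on stdin and succeeds only if
# the named storage class is listed. (hypothetical helper name)
has_storageclass() {
  # -x matches the whole line, -F treats the dots literally.
  grep -qxF "storageclass.storage.k8s.io/$1"
}

# Possible usage against a live cluster (not executed here):
#   oc get storageclass -o name | has_storageclass csi-s3-s3fs \
#     || echo "csi-s3-s3fs is missing, create it with the steps below"
```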

Configure DigitalOcean Spaces (S3) Storage

The Behavior Analytics Service (BAS) requires ReadWriteMany (RWX) support, which the DO block storage does not provide. To add support for RWX we need to configure a storage class that uses DO Spaces. Following the article at https://medium.com/asl19-developers/create-readwritemany-persistentvolumeclaims-on-your-kubernetes-cluster-3a8db51f98e3 we will create and configure a new storage class.

Log into the Digital Ocean web console with administrative privileges.

Select Spaces from the left hand navigation menu, then click the Manage Keys button on the top right of the screen.

Under the Spaces access keys section click the Generate New Key button.

Name the new key okd-csi and click the green checkbox next to the name to create the new key.

The key and its secret will now be displayed. Make sure you record both of these as the secret will only be displayed this once and cannot be recovered.

From the command line, execute the following to check out the csi-s3 project.

git clone https://github.com/ctrox/csi-s3.git

Create a secret in the kube-system namespace for the Spaces key and secret. Run the following command with the values replaced for your key, secret and deployment region to create the secret.

oc apply -f - >/dev/null <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: csi-s3-secret
  namespace: kube-system
stringData:
  accessKeyID: [YOUR_DO_SPACES_ACCESS_KEY_ID]
  secretAccessKey: [YOUR_DO_SPACES_SECRET_ACCESS_KEY]
  endpoint: "https://[YOUR_DO_REGION].digitaloceanspaces.com"
  region: ""
  encryptionKey: ""
EOF

Change directories to the csi-s3/deploy/kubernetes directory. Here you will find the attacher.yaml, provisioner.yaml, and csi-s3.yaml configuration files. Execute the following commands to create the required configurations.

oc create -f provisioner.yaml
oc create -f attacher.yaml
oc create -f csi-s3.yaml

Change directories to the examples directory under csi-s3/deploy/kubernetes. Open the storageclass.yaml file in a text editor. Edit the mounter entry from rclone to goofys, then save and close the file.

Execute the following command to create the storage class.

oc create -f storageclass.yaml

Note that when a PVC is removed the underlying bucket is not deleted, so you will need to manually clean up unused buckets in the DigitalOcean web console.

Install Cert Manager

Although cert-manager is available from the Operator Hub, the IBM Suite License Service requires an older version. The newer 1.5.4 release does not provide the expected CR tags.

From the command line run the following command.

oc create namespace cert-manager

From the command line change to use the new project.

oc project cert-manager

From the command line run the following command to install the cert-manager operator.

oc apply -f https://github.com/jetstack/cert-manager/releases/download/v1.1.0/cert-manager.yaml

Configure Let's Encrypt CA

Let's Encrypt provides a free, public ACME certificate issuing service and Cert Manager provides support for ACME certificate management. Cert Manager also provides support for integrated DNS certificate verification using the DigitalOcean DNS service. The following steps create a new ClusterIssuer resource for the Let's Encrypt services.

Cert Manager DNS Verification Documentation: https://cert-manager.io/docs/configuration/acme/dns01/

Cert Manager Digital Ocean Provider: https://cert-manager.io/docs/configuration/acme/dns01/digitalocean/

Run the following script using the DIGITALOCEAN_ACCESS_TOKEN from the initial installation:

./scripts/create-letsencrypt-cluster-issuer.sh --token [DIGITALOCEAN_ACCESS_TOKEN]

If you do not provide the access token, you will be prompted to provide it from the command line.
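The contents of the script are not reproduced in this article. For readers who want to see roughly what a Let's Encrypt ClusterIssuer with DigitalOcean DNS verification looks like, here is a sketch based on the cert-manager documentation linked above. It is not necessarily what the script creates: the function name, the secret name digitalocean-dns, its access-token key, and the account key secret name are all assumptions. The issuer name letsencrypt is chosen to match the certificateIssuer name used by the Suite resource later in this guide.

```shell
# Print a ClusterIssuer manifest for Let's Encrypt using the DigitalOcean
# DNS01 solver. Usage: render_cluster_issuer EMAIL   (hypothetical helper)
render_cluster_issuer() {
  email=$1
  cat <<EOF
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt
spec:
  acme:
    email: ${email}
    server: https://acme-v02.api.letsencrypt.org/directory
    privateKeySecretRef:
      name: letsencrypt-account-key   # assumed secret name
    solvers:
      - dns01:
          digitalocean:
            tokenSecretRef:
              name: digitalocean-dns  # assumed secret name
              key: access-token       # assumed key name
EOF
}

# Possible usage against a live cluster (not executed here):
#   oc create secret generic digitalocean-dns -n cert-manager \
#     --from-literal=access-token=[DIGITALOCEAN_ACCESS_TOKEN]
#   render_cluster_issuer you@example.com | oc apply -f -
```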

Install Maximo Application Suite 8.6

Create a new project named mas-INSTANCEID-core, where INSTANCEID is the name of the deployment instance. In our example we will use sharptree.

Run the following command to create the project.

oc create namespace mas-INSTANCEID-core

We will now create a secret for the entitlement. Although the entitlement has already been created and is part of the global pull secrets, the IBM documentation requires this and we do not want to deviate from the IBM documentation.

Run the following command, replacing ENTITLEMENT_KEY with your IBM entitlement key that was obtained in the prior steps.

oc -n mas-INSTANCEID-core create secret docker-registry ibm-entitlement --docker-server=cp.icr.io --docker-username=cp --docker-password=ENTITLEMENT_KEY

Log into the OKD web console with administrator privileges. Under the Operators menu, select the Operator Hub. Search for IBM Maximo Application Suite and then select the IBM Maximo Application Suite entry. Click the Install button to bring up the installation details, select the 8.6.x channel and then select the namespace mas-INSTANCEID-core, then click the Install button to perform the install. The installation may take several minutes to complete as images are pulled and deployed.

Note it is critical that you select the 8.6.x channel and not 8.7.x as the 8.7.x dependencies do not currently deploy correctly. When this is fixed we will update this guide.

Once the operator has been created run the following command, replacing mas-INSTANCEID-core with your project name and YOUR_DOMAIN with the deployment domain, to create the base Suite CRD.

oc apply -f - >/dev/null << EOFapiVersion: core.mas.ibm.com/v1kind: Suitemetadata:name: INSTANCEIDlabels:mas.ibm.com/instanceId: INSTANCEIDnamespace: mas-INSTANCEID-corespec:certificateIssuer:duration: 87600hname: letsencryptrenewBefore: 360hdomain: YOUR_DOMAINlicense:accept: trueEOF

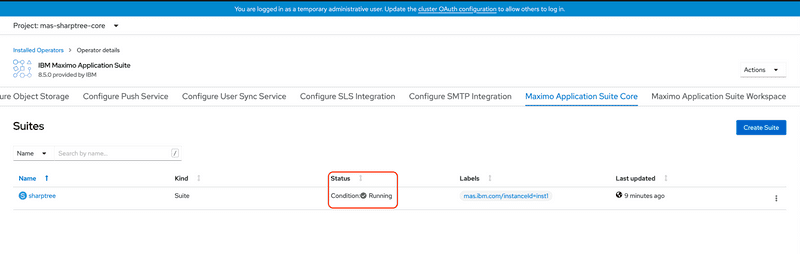

Wait until the Suite instance status is in a condition of Running as shown in the screenshot below and the INSTANCEID-credentials-superuser is available. Note that similar to many other operations, this may take several minutes to complete.
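Several steps in this guide involve waiting for a resource to appear. Rather than re-running a command by hand, you could wrap it in a small polling helper. This is a generic sketch and the function name retry is our own; it simply re-runs a command until it succeeds or the attempts run out.

```shell
# retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Re-runs COMMAND until it exits 0; returns 1 after ATTEMPTS failures.
retry() {
  attempts=$1
  delay=$2
  shift 2
  n=1
  while ! "$@"; do
    if [ "$n" -ge "$attempts" ]; then
      return 1
    fi
    n=$((n + 1))
    sleep "$delay"
  done
}

# Possible usage against a live cluster (not executed here): poll every
# 10 seconds, up to 60 times, for the superuser credentials secret.
#   retry 60 10 oc get secret INSTANCEID-credentials-superuser -n mas-INSTANCEID-core
```

The same helper works for the other long waits in this guide, provided you pick a command that fails until the resource is ready.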

Install the Service Binding Operator

Maximo Application Suite version 8.6 requires version 0.8 of the Service Binding Operator; later versions are not compatible. Details of the issue can be found here https://www.ibm.com/support/pages/ibm-maximo-application-suite-84x-and-85x-require-service-binding-operator-08.

Create the Service Binding Operator subscription with the installPlanApproval configured to Manual as shown below.

oc apply -f - >/dev/null <<EOF
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: rh-service-binding-operator
  namespace: openshift-operators
spec:
  channel: preview
  name: rh-service-binding-operator
  source: redhat-operators
  sourceNamespace: openshift-marketplace
  installPlanApproval: Manual
  startingCSV: service-binding-operator.v0.8.0
EOF

To find the install plan, run the following command, which prints the install plan name to the console.

installplan=$(oc get installplan -n openshift-operators | grep -i service-binding | awk '{print $1}'); echo "installplan: $installplan"

Finally, approve the install plan with the following command, replacing [install_plan_name] with the name from the previous step.

oc patch installplan [install_plan_name] -n openshift-operators --type merge --patch '{"spec":{"approved":true}}'
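The grep above matches any install plan mentioning service-binding, including ones that are already approved. A slightly stricter filter is sketched below. It assumes the default table output of oc get installplan has the columns NAME, CSV, APPROVAL and APPROVED in that order; verify this against your own cluster before relying on it. The function name pending_installplans is hypothetical.

```shell
# Reads `oc get installplan -n openshift-operators` table output on stdin
# and prints the names of unapproved plans for the given CSV.
# Usage: ... | pending_installplans CSV_NAME   (hypothetical helper name)
pending_installplans() {
  # NR > 1 skips the header row; $2 is the CSV column, $4 is APPROVED.
  awk -v csv="$1" 'NR > 1 && $2 == csv && $4 == "false" { print $1 }'
}

# Possible usage against a live cluster (not executed here):
#   plan=$(oc get installplan -n openshift-operators \
#     | pending_installplans service-binding-operator.v0.8.0)
#   oc patch installplan "$plan" -n openshift-operators --type merge \
#     --patch '{"spec":{"approved":true}}'
```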

Install MongoDB Operator

Manual Configuration

Create a new namespace named mongodb with the following command.

oc create namespace mongodb

Create the Custom Resource Definitions (CRD) with the following command.

oc create -f https://github.com/mongodb/mongodb-enterprise-kubernetes/raw/master/crds.yaml

Create the MongoDB Operator with the following command.

oc create -f https://github.com/mongodb/mongodb-enterprise-kubernetes/raw/master/mongodb-enterprise-openshift.yaml

Run the following command, replacing the REPLACE_WITH_YOUR_PASSWORD value with your own password for the opsman user. Note that Ops Manager has strict password requirements; if you use a weak password the Ops Manager user will fail to create. Make sure you choose a password that is at least 12 characters long and has uppercase letters, lowercase letters and two special characters.

oc apply -f - >/dev/null <<EOF
apiVersion: v1
kind: Secret
stringData:
  FirstName: Operations
  LastName: Manager
  Password: REPLACE_WITH_YOUR_PASSWORD
  Username: opsman
type: Opaque
metadata:
  name: opsman-admin-credentials
  namespace: mongodb
EOF
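Because a weak password only shows up later as a failed user creation, it can be worth checking the password before creating the secret. The sketch below checks only the rules stated above (12 or more characters, upper and lowercase letters, two special characters); it is not the actual Ops Manager policy, which may enforce more. The function name strong_enough is hypothetical, and any character that is not a letter or digit is counted as special.

```shell
# Succeeds if the password meets the rules described in this article.
# Usage: strong_enough PASSWORD   (hypothetical helper name)
strong_enough() {
  pw=$1
  # At least 12 characters.
  [ "${#pw}" -ge 12 ] || return 1
  # At least one uppercase and one lowercase letter.
  case $pw in *[[:upper:]]*) ;; *) return 1 ;; esac
  case $pw in *[[:lower:]]*) ;; *) return 1 ;; esac
  # At least two characters that are neither letters nor digits.
  specials=$(printf '%s' "$pw" | tr -d '[:alnum:]')
  [ "${#specials}" -ge 2 ]
}

# Possible usage:
#   strong_enough "$OPSMAN_PASSWORD" || echo "password too weak"
```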

Run the following command, replacing the REPLACE_WITH_YOUR_PASSWORD value with your own password for the opsman-db-password.

oc apply -f - >/dev/null <<EOF
apiVersion: v1
kind: Secret
stringData:
  password: REPLACE_WITH_YOUR_PASSWORD
type: Opaque
metadata:
  name: opsman-db-password
  namespace: mongodb
EOF

Run the following command, replacing the YOUR_EMAIL value with your own email address to create a new instance of the Mongo Ops Manager.

oc apply -f - >/dev/null <<EOF
apiVersion: mongodb.com/v1
kind: MongoDBOpsManager
metadata:
  name: ops-manager
  namespace: mongodb
spec:
  adminCredentials: opsman-admin-credentials
  applicationDatabase:
    logLevel: INFO
    members: 3
    passwordSecretKeyRef:
      name: opsman-db-password
    persistent: true
    project: mongodb
    type: ReplicaSet
    version: 5.0.1-ent
  backup:
    enabled: false
  configuration:
    mms.fromEmailAddr: YOUR_EMAIL
  externalConnectivity:
    type: LoadBalancer
  replicas: 1
  version: 5.0.1
EOF

Note it may take several minutes for everything to come up. The ops-manager-0 pod will perform a migration as it reconciles its members as part of the initial start up. This process can take 10 minutes or more and must be complete before moving on to the next steps.

Once everything has been deployed and verified, navigate to Networking > Routes on the left side menu.

Click the Create Route button.

Click the Edit YAML link on the top right and paste the following YAML, updating the CLUSTER_NAME and DOMAIN values with your cluster name and domain, and entering your edge certificate, key, and CA certificate values. Make sure you keep the proper indentation as required by YAML.

kind: Route
apiVersion: route.openshift.io/v1
metadata:
  name: ops-manager
  namespace: mongodb
  labels:
    app: ops-manager-svc
    controller: mongodb-enterprise-operator
  annotations:
    openshift.io/host.generated: 'true'
spec:
  host: ops-manager-mongodb.apps.CLUSTER_NAME.DOMAIN
  to:
    kind: Service
    name: ops-manager-svc
    weight: 100
  port:
    targetPort: mongodb
  tls:
    termination: edge
    certificate: |
      YOUR_CERTIFICATE
    key: |
      YOUR_KEY
    caCertificate: |
      YOUR_CA
    insecureEdgeTerminationPolicy: Redirect
  wildcardPolicy: None

Once complete click the Create button to create the route.

Setup MongoDB

Using a web browser go to https://ops-manager-mongodb.apps.[CLUSTER_NAME].[DOMAIN] where [CLUSTER_NAME] is your cluster name and [DOMAIN] is your domain. The Ops Manager login should be displayed.

Log into the Ops Manager with the opsman-admin-credentials credentials you created earlier.

Enter the required values. Note that you will need details for a valid SMTP server as part of the first page of questions. Click the Continue button, then review the security settings and adjust as desired, or just accept the defaults, and click Continue again. Answer the question about data collection and click Continue, then keep clicking Continue through the remaining screens, accepting the defaults.

Depending on the current version you may receive a message that your agents are out of date. If this is the case you can update your deployment spec with the latest version and OpenShift will update to the latest version. Note that upgrading may take several minutes to complete.

Create MongoDB Organization

Log into the MongoDB Ops manager with the admin user that was created in the previous section.

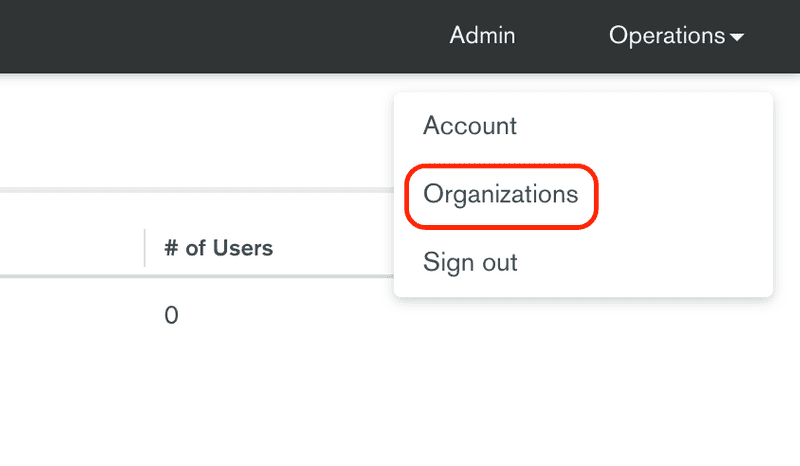

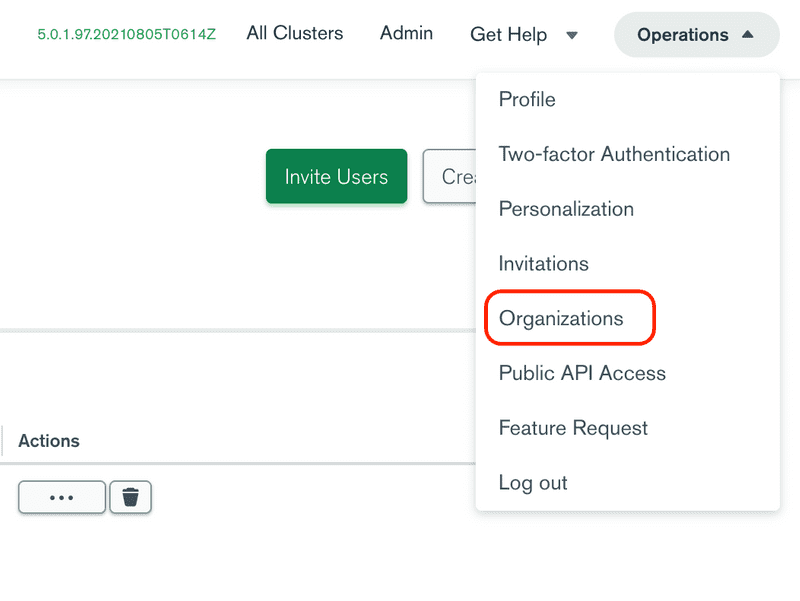

Click the Operations menu on the top right of the screen and then select the Organizations menu item.

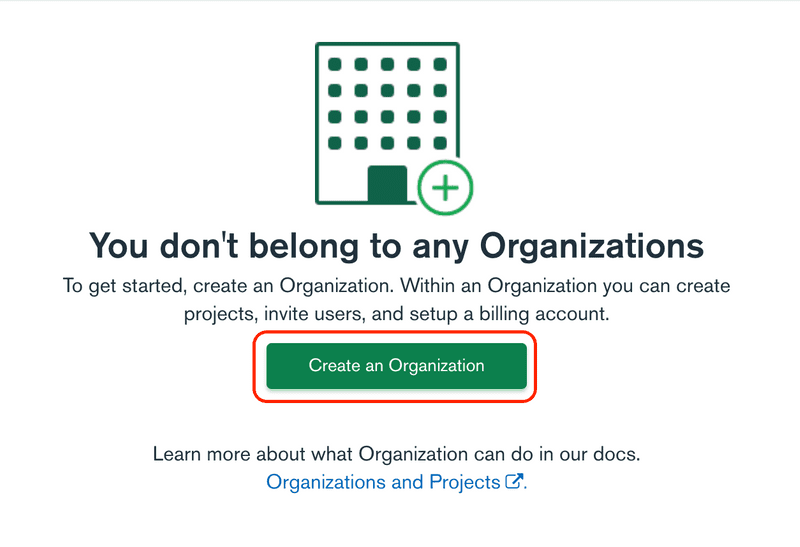

Click the Create New Organization button.

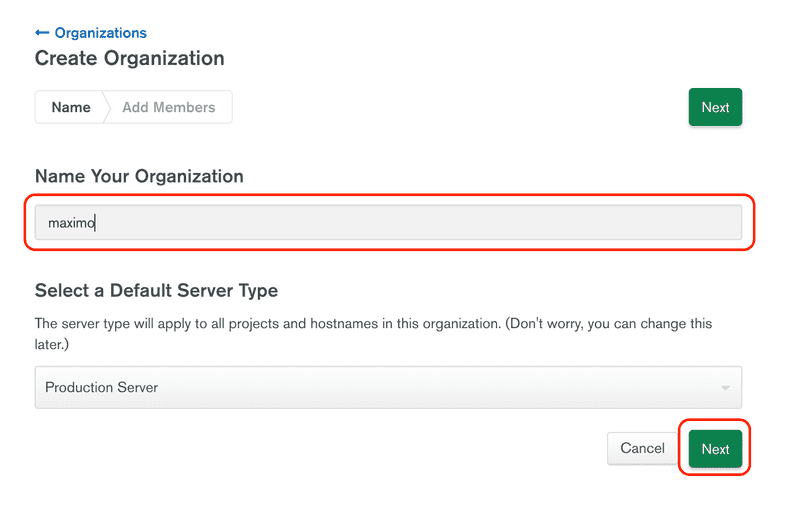

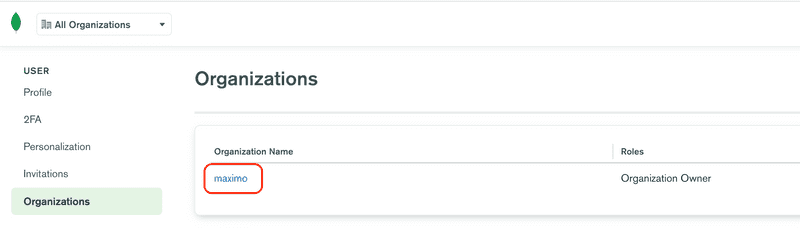

Enter a new organization name, for the example we are using maximo, but you can use whatever makes sense for you. Once the name has been entered, click the Next button.

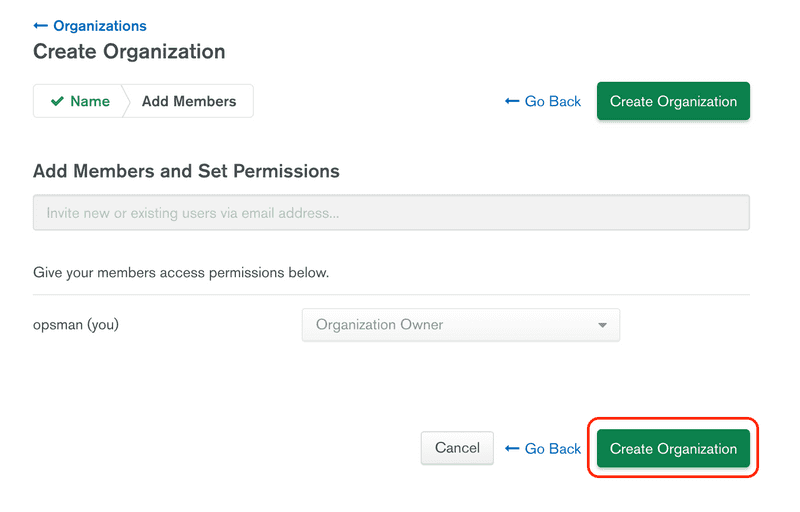

On the next screen we will add members later, so click the Create Organization button to complete the process.

Create API Keys

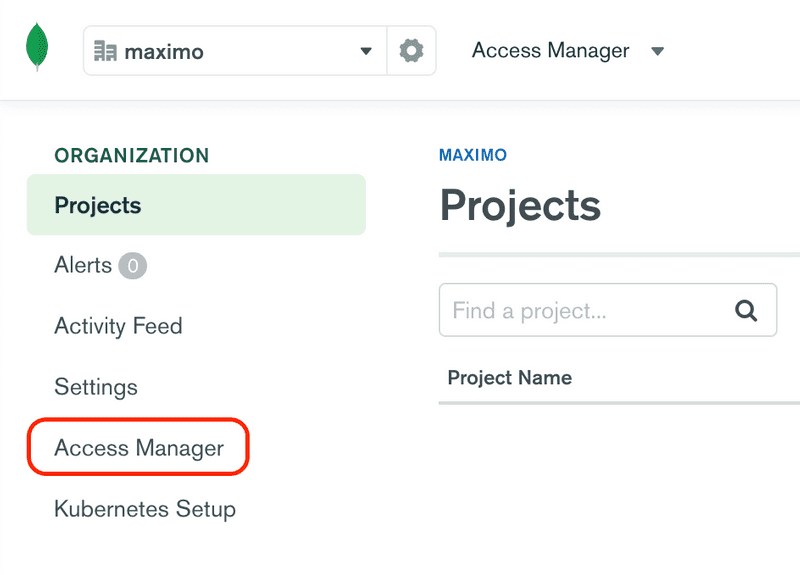

We are going to create a project for IBM Suite Licensing Service (SLS). From the Organization page in the Ops Manager, click the Access Manager menu item from the left side menu.

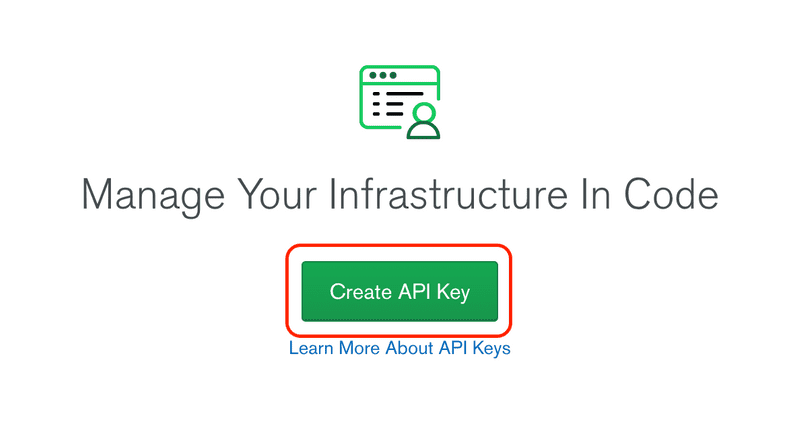

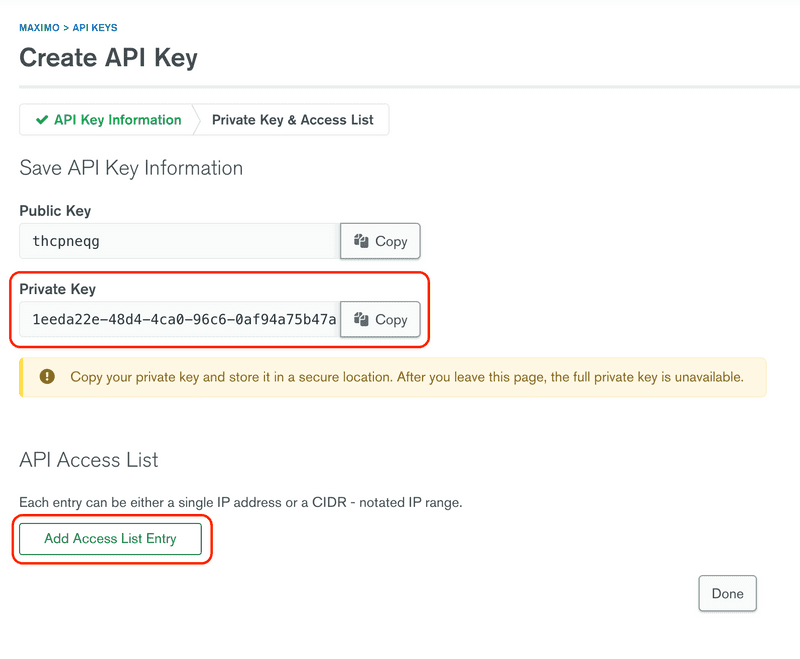

Click the API Keys tab and then click the Create API Key button.

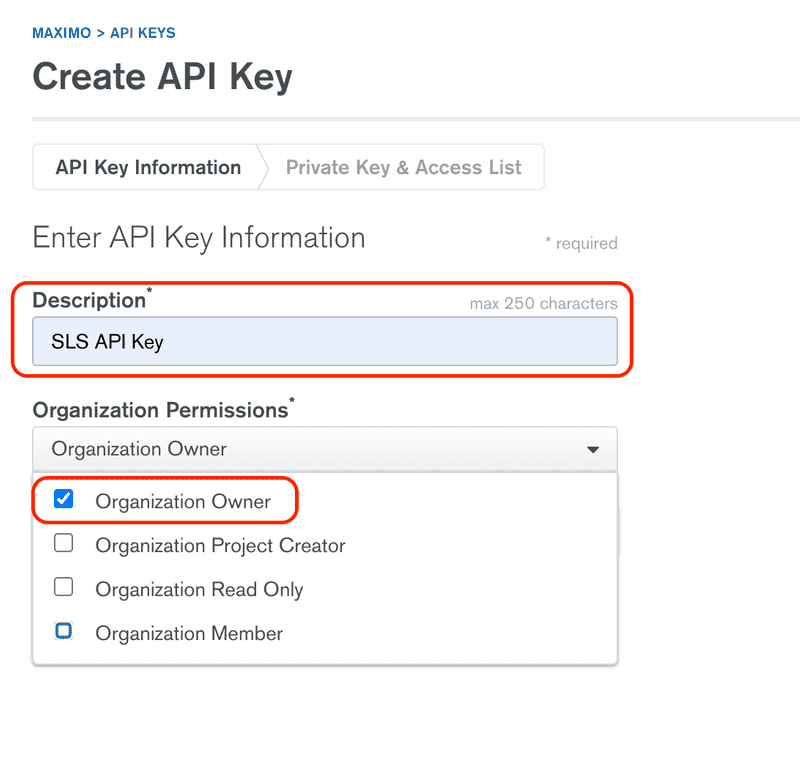

Enter a Description (SLS API Key) and then select Organization Owner for Organization Permissions, then click the Next button.

On the next screen the private API key will be displayed. This is the only time that the key will be displayed, it is unrecoverable, so make sure you copy it to a safe place before continuing. Once you have saved the private API key, click the Add Whitelist Entry button.

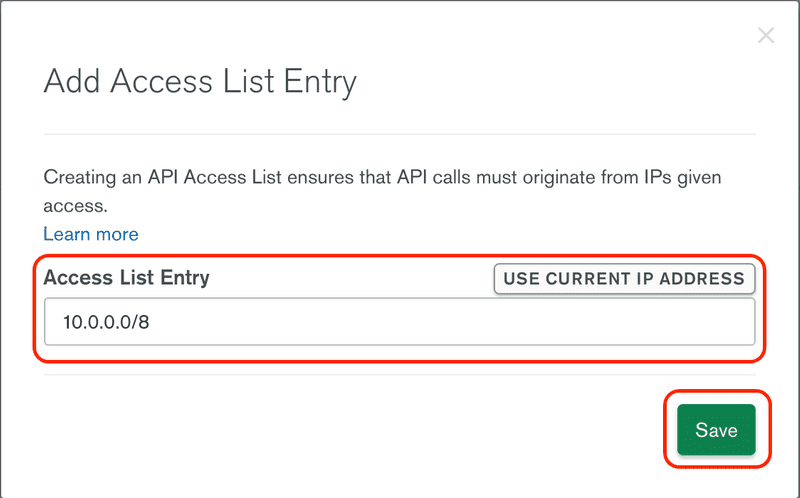

The whitelist determines the source IP addresses that can use the API key. For our example we are going to be permissive and allow anything on the internal network to access the database. You may want to be narrower in your permissions. In this case enter 10.0.0.0/8, which is a CIDR rule that covers all addresses in the 10.x.x.x space. Click the Save button to continue.
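If you are unsure whether a given pod or node address is covered by a CIDR rule, the arithmetic is simple: an address matches when its leading bits (8 of them for a /8) equal those of the network. The sketch below illustrates the check in plain shell. The function names are hypothetical, and it assumes well-formed IPv4 addresses without leading zeros in any octet.

```shell
# Pack a dotted-quad IPv4 address into a single integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address on "." into four positional params
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeeds if IP falls inside CIDR. Usage: ip_in_cidr 10.1.2.3 10.0.0.0/8
ip_in_cidr() {
  ip=$1
  net=${2%/*}        # part before the slash
  bits=${2#*/}       # prefix length after the slash
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}
```

For example, ip_in_cidr 10.128.4.7 10.0.0.0/8 succeeds, while ip_in_cidr 192.168.1.5 10.0.0.0/8 fails.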

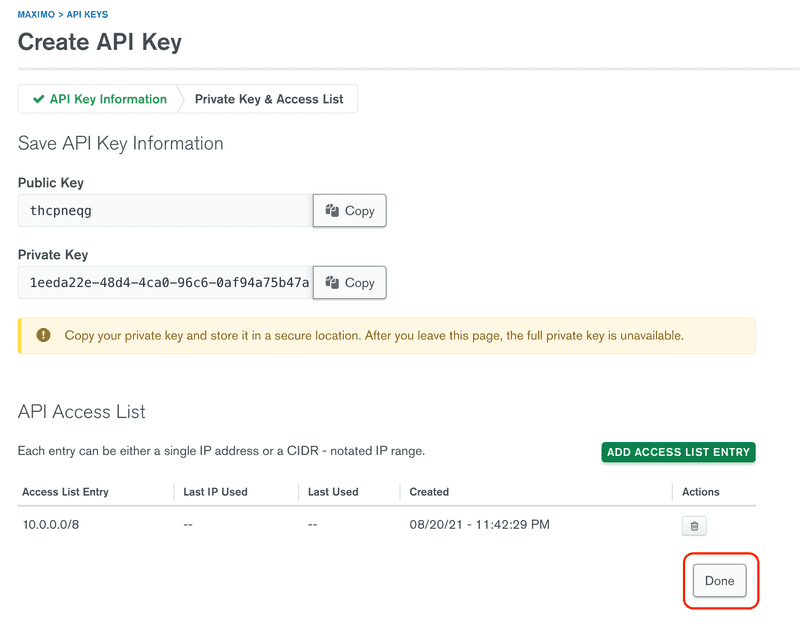

Review the information as entered and click Done to continue.

Repeat the previous steps, using MAS API Key for the description. Be certain to note the Public and Private Key values.

Create MongoDB Deployment

We are going to create one deployment for IBM SLS and one for IBM MAS.

Create a text file called

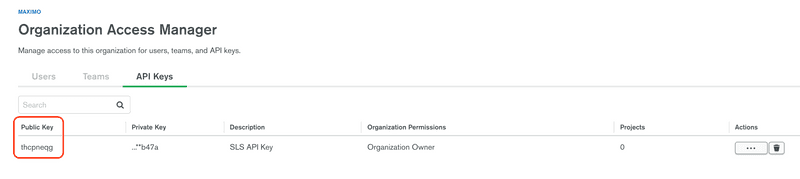

ibm-sls-cred.yamland paste example YAML below with the user and publicApiKey values replaced with the values from the SLS steps before. The user value is the Public Key value from when you created the SLS API Key in the previous section. The screen shot below shows where it is displayed.Run the following command, replacing the PUBLIC_KEY and PRIVATE_KEY values from when you created the API Key, to create the

ibm-sls-api-keysecret:oc apply -f - >/dev/null <<EOFapiVersion: v1kind: Secretmetadata:name: ibm-sls-api-keynamespace: mongodbstringData:user: PUBLIC_KEYpublicApiKey: PRIVATE_KEYtype: OpaqueEOFNow run the following command, replacing the PUBLIC_KEY and PRIVATE_KEY values from when you created the API Key, to create the

ibm-mas-api-keysecret:oc apply -f - >/dev/null <<EOFapiVersion: v1kind: Secretmetadata:name: ibm-mas-api-keynamespace: mongodbstringData:user: PUBLIC_KEYpublicApiKey: PRIVATE_KEYtype: OpaqueEOFNext create a ConfigMap for mapping the MongoDB Organization to the deployment. You will need to get the MongoDB Organization ID from the MongoDB Ops Manager.

Click the Operations menu on the top right of the screen and select the Organizations menu item.

Then select your Organization entry, in this example it is maximo.

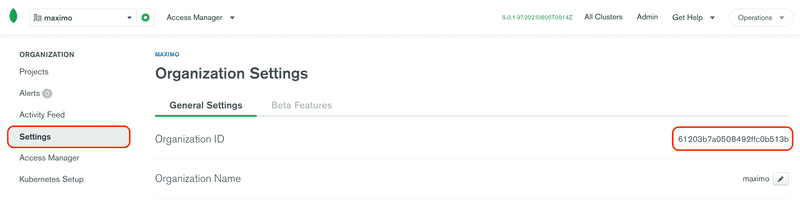

Click the Settings menu item from the left side navigation menu and then note the Organization ID. This is the value you need for the orgId value in the YAML below.

Run the following command, replacing the ORGANIZATION_ID with the Organization ID obtained above, to create the ibm-sls-config ConfigMap in the mongodb namespace.

oc apply -f - >/dev/null <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: ibm-sls-config
  namespace: mongodb
data:
  projectName: "ibm-sls"
  orgId: ORGANIZATION_ID
  baseUrl: http://ops-manager-svc.mongodb.svc.cluster.local:8080
EOF

Repeat the previous steps by running the following command, replacing the ORGANIZATION_ID with the Organization ID obtained above, to create the ibm-mas-config ConfigMap in the mongodb namespace.

oc apply -f - >/dev/null <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: ibm-mas-config
  namespace: mongodb
data:
  projectName: "ibm-mas"
  orgId: ORGANIZATION_ID
  baseUrl: http://ops-manager-svc.mongodb.svc.cluster.local:8080
EOF

Run the following command to create a new CA and certificates for the Mongo deployment to use. This requires that the Cert Manager was installed properly from the previous section. If you skipped that step, ensure that you do it now.

oc create -f ./resources/mongo-certs.yaml

Now we need to take the certificate that was created and concatenate the certs and keys in a format that Mongo can use. First, create a temporary directory. For our example we will use mongodb.

mkdir mongodb

Run the following commands to extract and concatenate the certificates.

oc get secret ibm-sls-mongo-tls -o json -n mongodb | jq '.data["tls.crt"]'| sed -e 's/^"//' -e 's/"$//' | base64 --decode > ./mongodb/ibm-sls-0-pem
oc get secret ibm-sls-mongo-tls -o json -n mongodb | jq '.data["tls.key"]'| sed -e 's/^"//' -e 's/"$//' | base64 --decode >> ./mongodb/ibm-sls-0-pem
oc get secret ibm-sls-mongo-tls -o json -n mongodb | jq '.data["tls.crt"]'| sed -e 's/^"//' -e 's/"$//' | base64 --decode > ./mongodb/ibm-sls-1-pem
oc get secret ibm-sls-mongo-tls -o json -n mongodb | jq '.data["tls.key"]'| sed -e 's/^"//' -e 's/"$//' | base64 --decode >> ./mongodb/ibm-sls-1-pem
oc get secret ibm-sls-mongo-tls -o json -n mongodb | jq '.data["tls.crt"]'| sed -e 's/^"//' -e 's/"$//' | base64 --decode > ./mongodb/ibm-sls-2-pem
oc get secret ibm-sls-mongo-tls -o json -n mongodb | jq '.data["tls.key"]'| sed -e 's/^"//' -e 's/"$//' | base64 --decode >> ./mongodb/ibm-sls-2-pem
oc get secret ibm-mas-mongo-tls -o json -n mongodb | jq '.data["tls.crt"]'| sed -e 's/^"//' -e 's/"$//' | base64 --decode > ./mongodb/ibm-mas-0-pem
oc get secret ibm-mas-mongo-tls -o json -n mongodb | jq '.data["tls.key"]'| sed -e 's/^"//' -e 's/"$//' | base64 --decode >> ./mongodb/ibm-mas-0-pem
oc get secret ibm-mas-mongo-tls -o json -n mongodb | jq '.data["tls.crt"]'| sed -e 's/^"//' -e 's/"$//' | base64 --decode > ./mongodb/ibm-mas-1-pem
oc get secret ibm-mas-mongo-tls -o json -n mongodb | jq '.data["tls.key"]'| sed -e 's/^"//' -e 's/"$//' | base64 --decode >> ./mongodb/ibm-mas-1-pem
oc get secret ibm-mas-mongo-tls -o json -n mongodb | jq '.data["tls.crt"]'| sed -e 's/^"//' -e 's/"$//' | base64 --decode > ./mongodb/ibm-mas-2-pem
oc get secret ibm-mas-mongo-tls -o json -n mongodb | jq '.data["tls.key"]'| sed -e 's/^"//' -e 's/"$//' | base64 --decode >> ./mongodb/ibm-mas-2-pem

Now create a new secret that contains those certificates.

(cd ./mongodb && oc create secret generic ibm-sls-cert --from-file=ibm-sls-0-pem --from-file=ibm-sls-1-pem --from-file=ibm-sls-2-pem -n mongodb)
(cd ./mongodb && oc create secret generic ibm-mas-cert --from-file=ibm-mas-0-pem --from-file=ibm-mas-1-pem --from-file=ibm-mas-2-pem -n mongodb)

Now extract the CA that was used to create these certificates.

oc get secret ibm-sls-mongo-tls -n mongodb -o json | jq '.data["ca.crt"]'| sed -e 's/^"//' -e 's/"$//' | base64 --decode > ./mongodb/sls-ca-pem
oc get secret ibm-mas-mongo-tls -n mongodb -o json | jq '.data["ca.crt"]'| sed -e 's/^"//' -e 's/"$//' | base64 --decode > ./mongodb/mas-ca-pem

Finally, create a ConfigMap that contains the CA that will be used by the MongoDB deployment to validate the certificates.

(cd ./mongodb && /usr/bin/cp -f sls-ca-pem ca-pem && oc create configmap ibm-sls-ca --from-file=ca-pem -n mongodb && rm -f ca-pem)
(cd ./mongodb && /usr/bin/cp -f mas-ca-pem ca-pem && oc create configmap ibm-mas-ca --from-file=ca-pem -n mongodb && rm -f ca-pem)

Run the following command to create the ibm-sls MongoDB project.

oc apply -f - >/dev/null <<EOF
apiVersion: mongodb.com/v1
kind: MongoDB
metadata:
  name: ibm-sls
  namespace: mongodb
spec:
  credentials: ibm-sls-api-key
  logLevel: INFO
  members: 3
  opsManager:
    configMapRef:
      name: ibm-sls-config
  persistent: true
  type: ReplicaSet
  version: "5.0.1-ent"
  security:
    tls:
      enabled: true
      ca: ibm-sls-ca
EOF

Then run the following command to create the ibm-mas MongoDB project.

oc apply -f - >/dev/null <<EOF
apiVersion: mongodb.com/v1
kind: MongoDB
metadata:
  name: ibm-mas
  namespace: mongodb
spec:
  credentials: ibm-mas-api-key
  logLevel: INFO
  members: 3
  opsManager:
    configMapRef:
      name: ibm-mas-config
  persistent: true
  type: ReplicaSet
  version: "5.0.1-ent"
  security:
    tls:
      enabled: true
      ca: ibm-mas-ca
EOF
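The certificate extraction commands earlier in this section repeat the same pipeline twelve times. If you prefer something shorter, the same result can be produced with a small helper and a loop. This is a sketch under a few assumptions: write_pem is a hypothetical function name, and the commented usage relies on the jsonpath output format of oc get to read the base64 values directly, instead of the jq and sed pipeline used above.

```shell
# Decode a base64 certificate and key and write them, concatenated, to a file.
# Usage: write_pem CERT_B64 KEY_B64 OUTFILE   (hypothetical helper name)
write_pem() {
  {
    printf '%s' "$1" | base64 --decode
    printf '%s' "$2" | base64 --decode
  } > "$3"
}

# Possible usage against a live cluster (not executed here):
#   for name in ibm-sls ibm-mas; do
#     crt=$(oc get secret "$name-mongo-tls" -n mongodb -o jsonpath='{.data.tls\.crt}')
#     key=$(oc get secret "$name-mongo-tls" -n mongodb -o jsonpath='{.data.tls\.key}')
#     for i in 0 1 2; do
#       write_pem "$crt" "$key" "./mongodb/$name-$i-pem"
#     done
#   done
```

The output files have the same names and contents as those produced by the longer commands, so the secret creation steps are unchanged.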

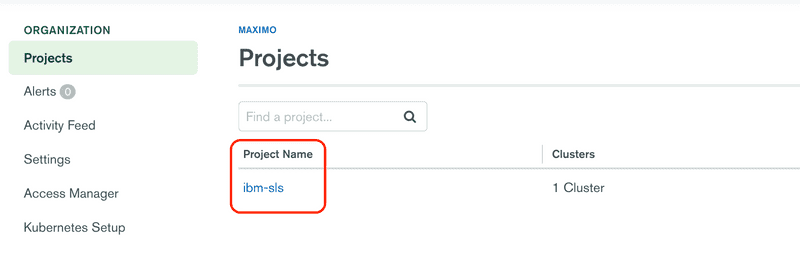

Create MongoDB Users

MongoDB users for the IBM Suite Licensing Services and Maximo Application Suite are needed for the service. Return to the MongoDB Ops Manager console and navigate to the Organization Projects. You should now see the projects ibm-sls and ibm-mas (not pictured).

For the following steps we will use the ibm-sls project.

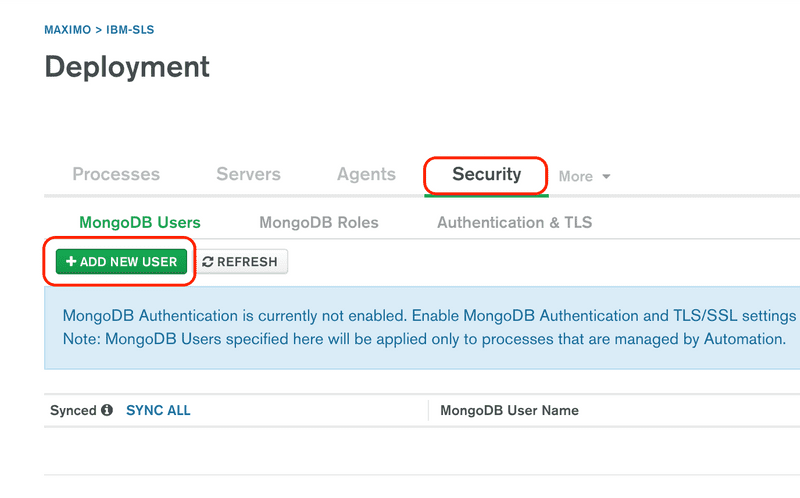

Click the

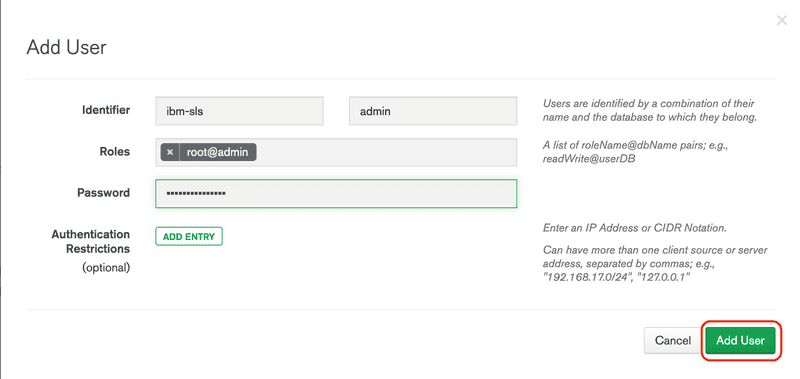

ibm-slsproject link, then click the Security tab, then click the+ ADD NEW USERbutton.For the database enter

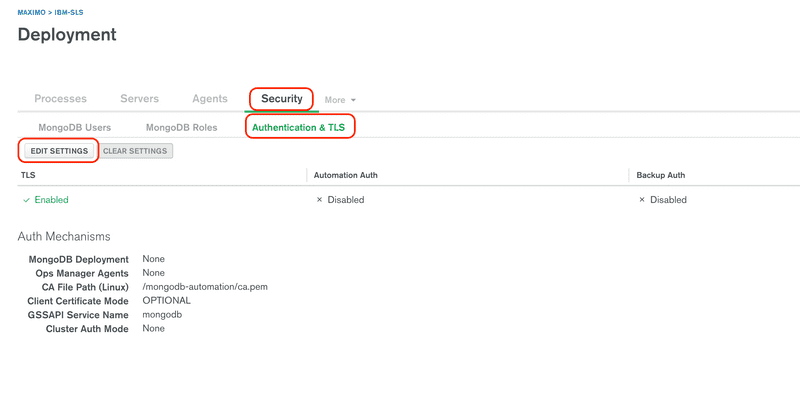

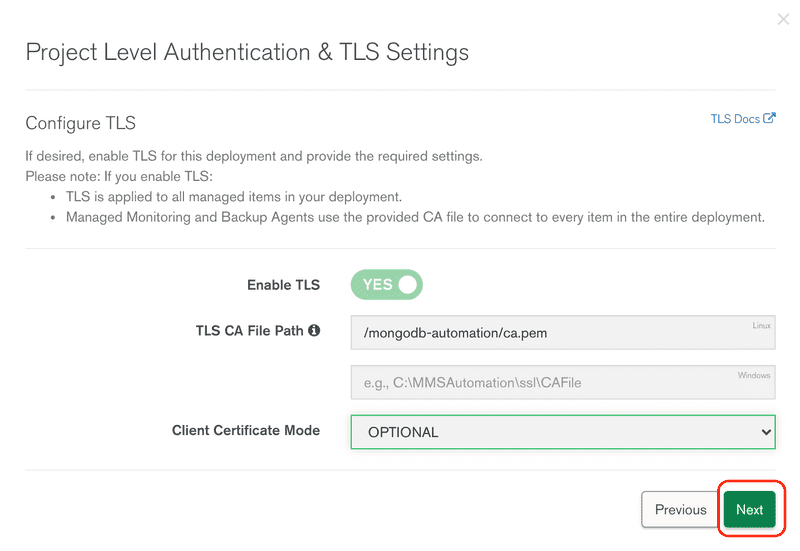

ibm-slsand for the name enteradmin, for the Roles enterroot@admin, then enter a password, be sure to save the password for later use in installing SLS, and click the Add User button.From the user list, click the Authentication & TLS tab. Then click the Edit Settings button.

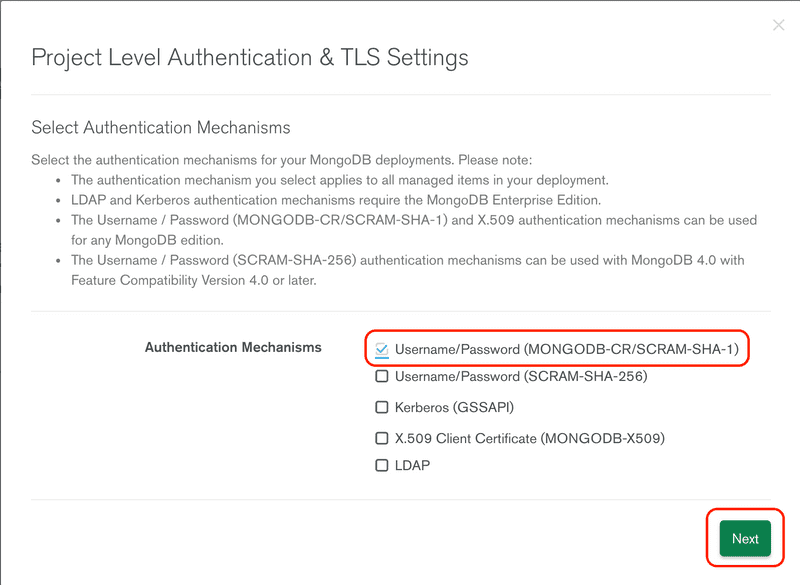

There are several authentication mechanisms. For this install we are going to use the most compatible, but also least secure option, which is SHA-1. You should choose a more secure method in a production environment. Click the Next button to continue.

TLS is required and cannot be turned off. Click Next to move to the next step.

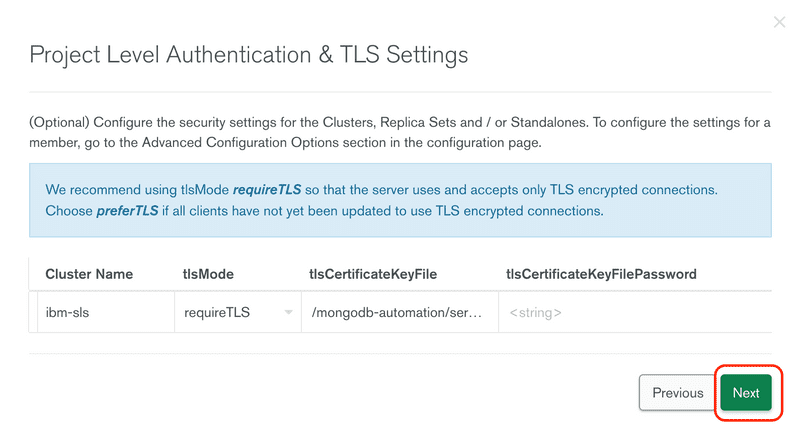

Review the project level TLS configuration.

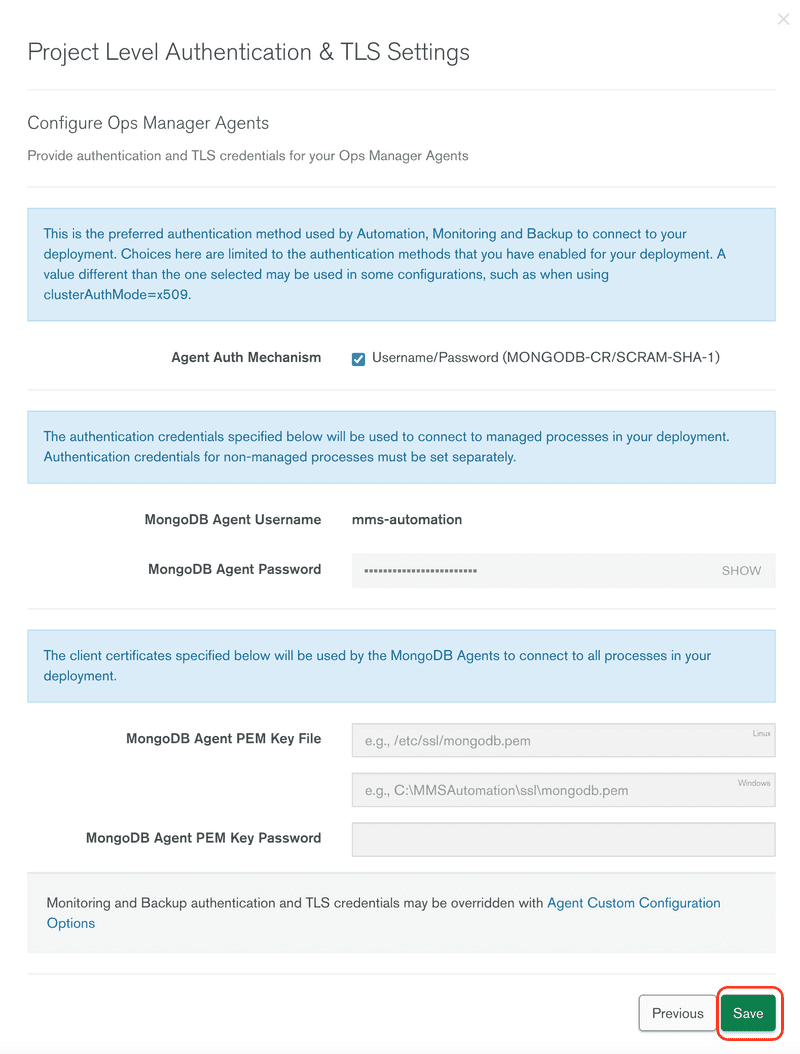

Review all the changes and click the Save button.

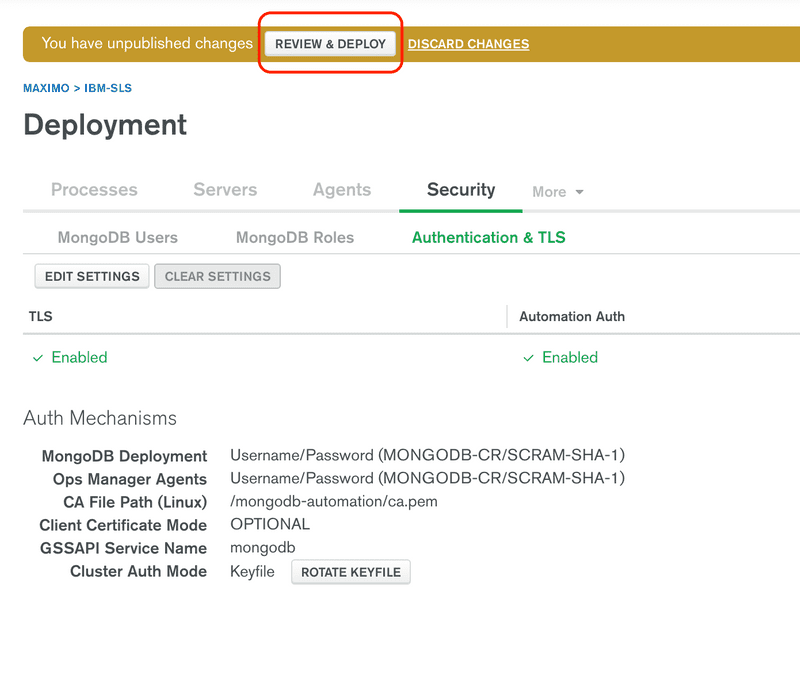

Back on the user list page, click on the REVIEW & DEPLOY button. This process can take several minutes so be patient and wait until it has successfully been deployed.

Repeat the previous steps, replacing ibm-sls with ibm-mas.

Install Behavior Analytics Services

Before installing the BAS Operator, there are some prerequisites that must be created. Make sure you perform the following steps before attempting to install BAS.

This IBM lab provides a reference for the following steps https://developer.ibm.com/openlabsdev/guide/behavior-analytics-services/course/bas-install/03.10

Create the BAS project. In this case it is called ibm-bas, but you can select a different name if you want.

oc new-project ibm-bas

Create a secret named "database-credentials" for PostgreSQL. These credentials will be used when creating the database; it is not expected that the database has already been configured.

oc create secret generic database-credentials --from-literal=db_username=[YOUR_NEW_DB_USERNAME] --from-literal=db_password=[YOUR_NEW_DB_PASSWORD] -n ibm-bas

Create a secret named "grafana-credentials" to set up the Grafana login credentials.

oc create secret generic grafana-credentials --from-literal=grafana_username=[YOUR_NEW_GRAFANA_USERNAME] --from-literal=grafana_password=[YOUR_NEW_GRAFANA_PASSWORD] -n ibm-bas

In the Operator Hub, search for Behavior Analytics Services and then click the Behavior Analytics Services entry.

Click the Install button on the top left of the operator details panel.

Select the ibm-bas namespace to install the operator and accept the defaults, then click the Install button.

The installation process can take several minutes to complete. Make sure to wait until it is complete.

Once the installation is complete, click the View Operator button to go to the newly installed operator.
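If you prefer the terminal to the console, the operator's install state can also be read from its ClusterServiceVersion (CSV). The following is a minimal sketch, assuming the operator was installed into the ibm-bas namespace; the helper name csv_phases is our own and is not part of oc. A phase of Succeeded indicates that the operator has finished installing.

```shell
# List each ClusterServiceVersion in a namespace together with its install phase.
# csv_phases is a hypothetical helper name; the namespace defaults to ibm-bas.
csv_phases() {
  csv_namespace="${1:-ibm-bas}"
  oc get csv -n "$csv_namespace" \
    -o custom-columns=NAME:.metadata.name,PHASE:.status.phase --no-headers
}

# Usage (requires a logged-in oc session):
#   csv_phases ibm-bas
```

The same helper can be pointed at another namespace, for example ibm-sls, once that operator has been installed.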

Run the following command to create a Full Deployment.

oc apply -f - >/dev/null <<EOF
apiVersion: bas.ibm.com/v1
kind: FullDeployment
metadata:
  name: maximo
  namespace: ibm-bas
spec:
  db_archive:
    frequency: '@monthly'
    retention_age: 6
    persistent_storage:
      storage_class: csi-s3-s3fs
      storage_size: 10G
  prometheus_scheduler_frequency: '@daily'
  airgapped:
    enabled: false
    backup_deletion_frequency: '@daily'
    backup_retention_period: 7
  image_pull_secret: bas-images-pull-secret
  kafka:
    storage_class: do-block-storage
    storage_size: 5G
    zookeeper_storage_class: do-block-storage
    zookeeper_storage_size: 5G
  env_type: lite
  prometheus_metrics: []
  event_scheduler_frequency: '@hourly'
  ibmproxyurl: 'https://iaps.ibm.com'
  allowed_domains: '*'
  postgres:
    storage_class: do-block-storage
    storage_size: 10G
EOF

- The installation process may take more than 20 minutes to complete, so be patient as it creates the operators for Kafka and Postgres. It is very important to make sure that the Full Deployment status goes from Installing to Ready. We have observed that sometimes the Full Deployment will change to a status of Failed due to a liveness test timing out. Eventually the process will complete and the status will change to Ready, so be patient and carefully check the event logs to understand what is going on before assuming a reported failure is actually a failure.
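The waiting described above can be scripted. The following is a minimal sketch of a polling loop that keeps checking until the status is Ready and deliberately does not stop on a Failed reading, since that status can be transient. The helper names wait_for_ready and fulldeployment_phase are our own, and the .status.phase field path is an assumption about the FullDeployment resource; confirm it against the output of oc get fulldeployment maximo -n ibm-bas -o yaml before relying on it.

```shell
# Poll a status command until it prints "Ready" or the attempts run out.
# A "Failed" reading is treated as possibly transient and does not end the loop.
# wait_for_ready is a hypothetical helper name.
wait_for_ready() {
  status_cmd="$1"          # function or command that prints the current status
  max_attempts="${2:-60}"  # number of polls before giving up
  delay="${3:-30}"         # seconds to sleep between polls
  attempt=1
  while [ "$attempt" -le "$max_attempts" ]; do
    # Ignore errors from the status command so a transient failure does not abort.
    status="$("$status_cmd" 2>/dev/null || true)"
    echo "attempt $attempt: status=${status:-unknown}" >&2
    if [ "$status" = "Ready" ]; then
      return 0
    fi
    if [ "$attempt" -lt "$max_attempts" ]; then
      sleep "$delay"
    fi
    attempt=$((attempt + 1))
  done
  return 1
}

# ASSUMPTION: the Full Deployment reports its state under .status.phase.
fulldeployment_phase() {
  oc get fulldeployment maximo -n ibm-bas -o jsonpath='{.status.phase}'
}

# Usage: poll every 30 seconds for up to 60 attempts (about 30 minutes).
#   wait_for_ready fulldeployment_phase 60 30
```

If the loop gives up, check the event logs as described above before concluding that the deployment has actually failed.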

Generate Key in Operator

Once complete, click on the Generate Key tab and then click the Create GenerateKey button. This will create a secret resource based on the key name given. Click the new key and then click the Resources tab to see the secret. Click the secret and then click the Reveal values link at the bottom to view the API Key. Make note of this key, as it will be required later during the Maximo Application Suite install.

Generate Key in BAS Dashboard

Open a browser and navigate to https://dashboard-ibm-bas.apps.[DOMAIN] where [DOMAIN] is your cluster domain value.

You may be presented with a login request similar to the one below:

Clicking the Log in with OpenShift button will take you to the OKD login screen.

Enter your OKD credentials to log in.

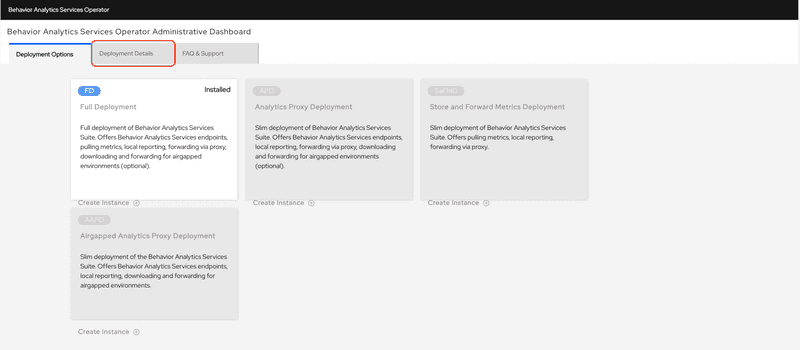

Verify that the Full Deployment has been successfully installed. You should see the word "Installed" in green in the Full Deployment tile. Click the Deployment Details button.

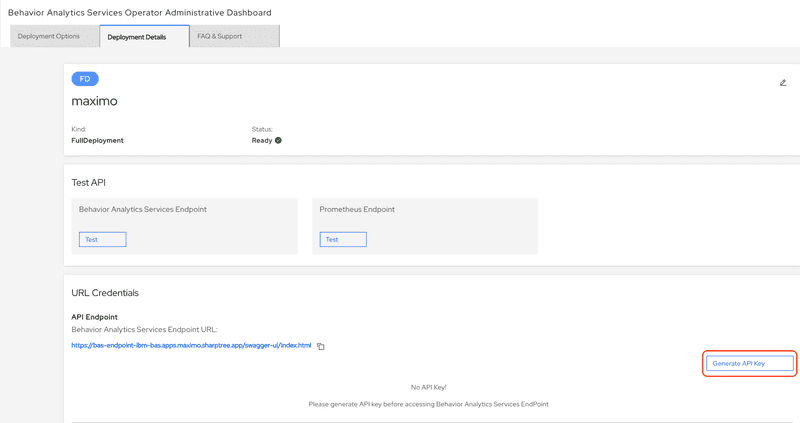

Click the Generate API Key button.

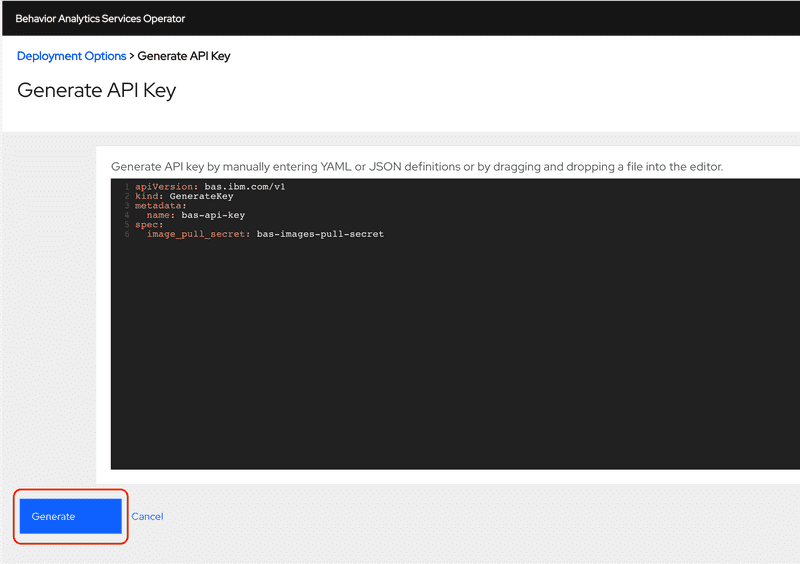

Then click the Generate button.

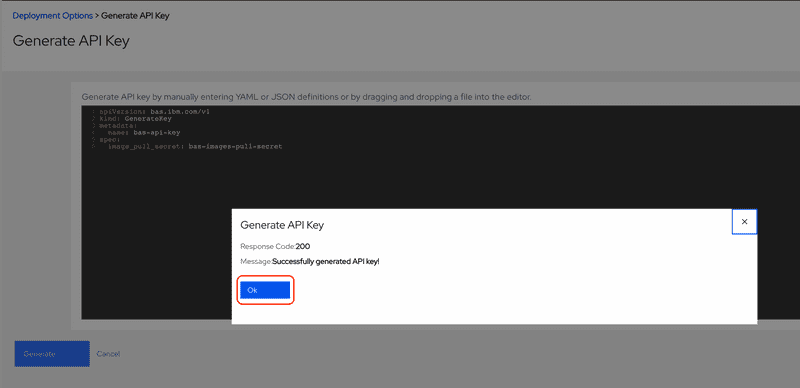

Click the Ok button to confirm that the API key generation request was accepted.

After clicking Ok you will be returned to the Generate API Key screen and there will be no confirmation that the key was generated. Click the Cancel button to return to the previous screen.

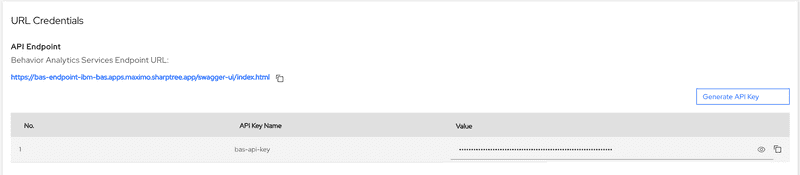

It may take a moment, but the key will be generated and become available. You may need to refresh the browser to view the key once it is generated. Click the Eye icon to view and copy the API Key, or click the Copy icon to copy it directly. Save this for the next steps.

7.1. These steps can be performed in the terminal by running the following command:

oc apply -f - >/dev/null <<EOF
apiVersion: bas.ibm.com/v1
kind: GenerateKey
metadata:
  name: bas-api-key
  namespace: ibm-bas
spec:
  image_pull_secret: bas-images-pull-secret
EOF

7.2. This will take a moment to complete. You can then get the API Key value from the bas-api-key Secret in the ibm-bas namespace. You can get the value by running the following command:

echo $(oc get secret bas-api-key -n ibm-bas -o json | jq -r ".data.apikey") | base64 -d && echo ""
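If jq is not installed, the same value can be read with oc's built-in JSONPath output. The following is a minimal sketch; the helper name secret_value is our own, and it assumes the field name contains no dots (a key such as ca.crt would need escaping in the JSONPath expression).

```shell
# Print one decoded field of a Secret without needing jq.
# secret_value is a hypothetical helper name; Secret data is stored base64-encoded.
secret_value() {
  secret_name="$1"   # for example bas-api-key
  secret_ns="$2"     # for example ibm-bas
  secret_field="$3"  # for example apikey (must not contain dots)
  oc get secret "$secret_name" -n "$secret_ns" \
    -o "jsonpath={.data.$secret_field}" | base64 -d
  echo ""
}

# Usage:
#   secret_value bas-api-key ibm-bas apikey
```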

Install IBM Suite Licensing Service (SLS)

Create a new project called ibm-sls.

oc create namespace ibm-sls

Run the following command, replacing the password with the password used when creating the ibm-sls project user.

oc apply -f - >/dev/null <<EOF
apiVersion: v1
kind: Secret
type: Opaque
metadata:
  name: sls-mongo-credentials
  namespace: ibm-sls
stringData:
  username: 'admin'
  password: '[YOUR_PASSWORD_FROM_MONGO_INSTALL]'
EOF

The current version of SLS does not deploy correctly in OKD 4.6 and therefore we must manually install the operator and ensure it does not upgrade beyond version 3.2.0. We will follow a similar procedure to the Service Binder, where we ensured it did not upgrade beyond version 0.8.

Create the operator group by running the following command.

oc apply -f - >/dev/null <<EOF
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: ibm-sls
  namespace: ibm-sls
spec:
  targetNamespaces:
    - ibm-sls
EOF

Create the subscription by running the following command. Note that the installPlanApproval is set to Manual.

oc apply -f - >/dev/null <<EOF
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: ibm-sls
  namespace: ibm-sls
spec:
  channel: 3.x
  name: ibm-sls
  source: ibm-operator-catalog
  sourceNamespace: openshift-marketplace
  startingCSV: ibm-sls.v3.2.0
  installPlanApproval: Manual
EOF

Run the following command to get the install plan for the SLS subscription.

installplan=$(oc get installplan -n ibm-sls | grep -i ibm-sls | awk '{print $1}'); echo "installplan: $installplan"Run the following command, replacing

[INSTALL_PLAN_NAME]with the value from the previous step, to approve the install plan and install the SLS operator.oc patch installplan [INSTALL_PLAN_NAME] -n ibm-sls --type merge --patch '{"spec":{"approved":true}}'After the installation completes, navigate to the

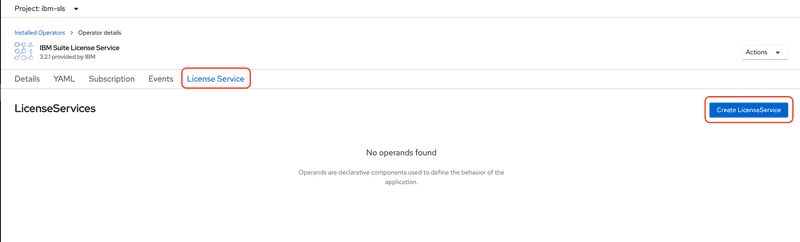

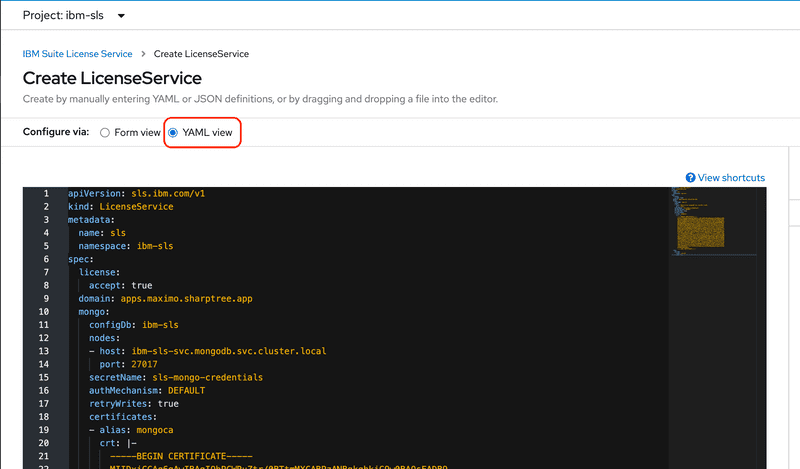

Operators>Installed Operatorsthen select the SLS operator, then click theLicense Servicetab and finally click theCreate LicenseServicebutton to create the new license service.Click the YAML view radio button to view the YAML deployment descriptor.

When performing the MongoDB deployment, there was a step where you exported the MongoDB CA PEM. It should still be in the

./mongodb/ca-pemfile. Otherwise you can run the following command to extract it.oc get secret ibm-sls-mongo-tls -n mongodb -o json | jq '.data["ca.crt"]'| sed -e 's/^"//' -e 's/"$//' | base64 --decodeGet the value from the

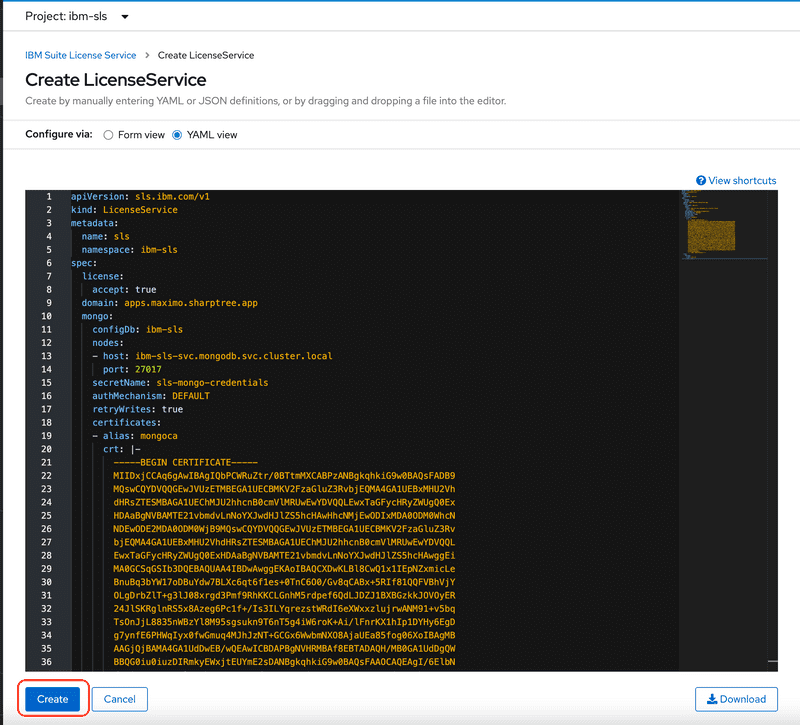

ca-pemfile and replace the[CA_PEM]value below with the certificate value. Be mindful of the indentations as the whole certificate must be indented below thecrt: |-key. Paste following into the Create LicenseService YAML editor, replacing the default value.apiVersion: sls.ibm.com/v1kind: LicenseServicemetadata:name: slsnamespace: ibm-slsspec:license:accept: truedomain: apps.maximo.sharptree.appmongo:configDb: ibm-slsnodes:- host: ibm-sls-0.ibm-sls-svc.mongodb.svc.cluster.localport: 27017- host: ibm-sls-1.ibm-sls-svc.mongodb.svc.cluster.localport: 27017- host: ibm-sls-2.ibm-sls-svc.mongodb.svc.cluster.localport: 27017secretName: sls-mongo-credentialsauthMechanism: DEFAULTretryWrites: truecertificates:- alias: mongocacrt: |-[CA_PEM]rlks:storage:class: do-block-storagesize: 5GThe deployment process may take several minutes. As with the other deployments, be patient and wait for the deployment to have a status of

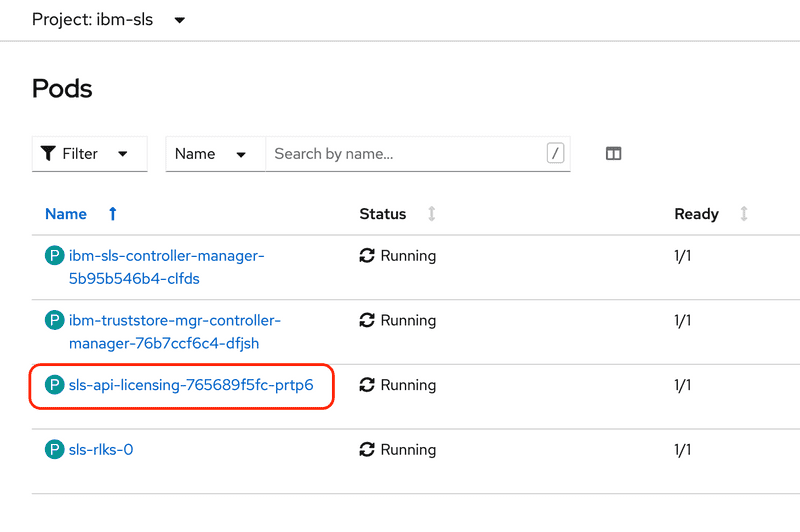

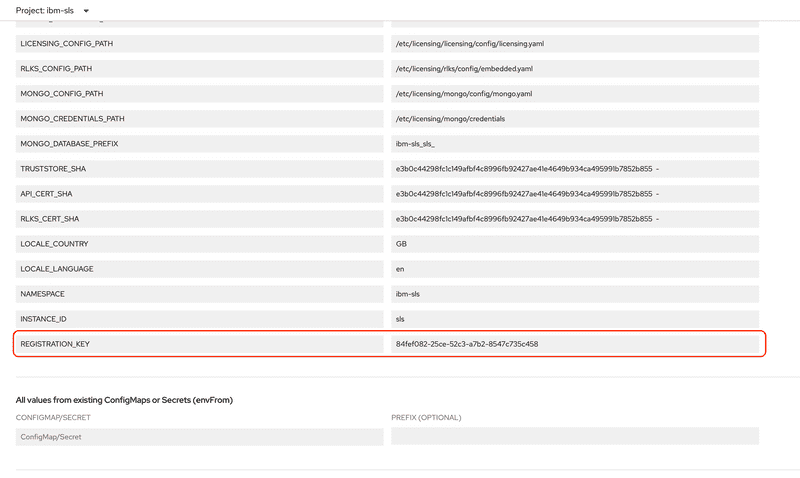

Ready.When the deployment is complete there will be a pod with a name starting with

sls-api-licensingand a label ofapp=sls-api-licensing.Click the pod link and then click the

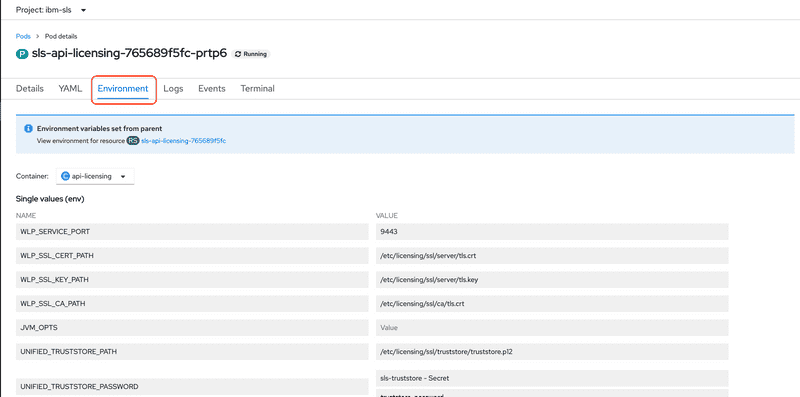

Environmenttab.Then scroll to the bottom and find the

REGISTRATION_KEYenvironment entry and make note of its value.Alternatively you can run the following command that will print the

REGISTRATION_KEYvalue.oc get pod -n ibm-sls --selector='app=sls-api-licensing' -o yaml | yq -r '.items[].spec.containers[].env[] | select(.name == "REGISTRATION_KEY").value'
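If yq is not available, a JSONPath filter can pull the same value using only oc. The following is a minimal sketch; the helper name sls_registration_key is our own, and it assumes the key is set as a literal value on the first pod matching the label, as the Environment tab steps above suggest.

```shell
# Print the REGISTRATION_KEY from the SLS licensing pod using only oc.
# sls_registration_key is a hypothetical helper name; the namespace defaults to ibm-sls.
sls_registration_key() {
  sls_namespace="${1:-ibm-sls}"
  # Select the env entry named REGISTRATION_KEY from the first matching pod.
  oc get pod -n "$sls_namespace" -l app=sls-api-licensing \
    -o jsonpath='{.items[0].spec.containers[*].env[?(@.name=="REGISTRATION_KEY")].value}'
  echo ""
}

# Usage:
#   sls_registration_key ibm-sls
```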

Conclusion

You now have all the supporting components needed to deploy Maximo Manage, including BAS, SLS, and MongoDB.

The next step is deploying and configuring Manage, which completes the series and provides a working Maximo Manage instance.

If you have questions or would like support deploying your own instance of MAS 8 Manage, please contact us at [email protected]

Change Log

| Date | Changes |
|---|---|
| 2022/05/09 | Updated references and instructions for MAS 8.6 and forcing SLS version 3.2.0 |